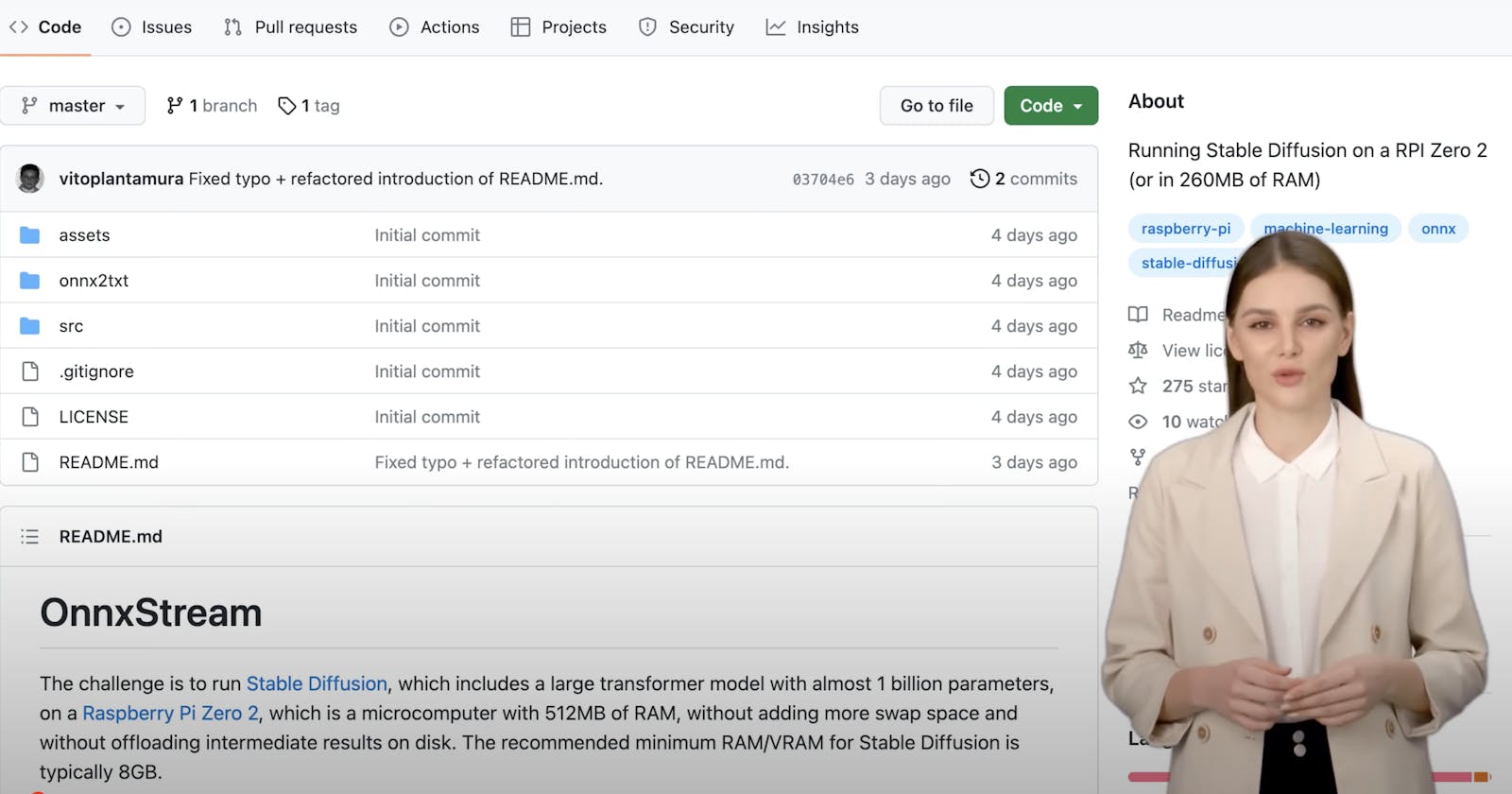

Github repo: https://github.com/WideSu/OnnxStream

Project idea: https://www.raspberrypi.com/news/creating-ai-art-with-raspberry-pi-magpimonday/

YouTube Video: https://youtu.be/NvJ4HtWQ_OY

Introduction

Localised GenAI models are the future of data privacy and security, but the cost of computing resources has stopped many people from developing their own GenAI models. I came across an interesting project on GitHub that runs the Stable Diffusion model on a Raspberry Pi Zero, despite its limited memory and computing power, by using attention slicing and quantization.

The project is called OnnxStream. It uses XNNPACK to optimise how Stable Diffusion generates AI imagery, and it was tested using Raspberry Pi OS Lite 64-bit on a Raspberry Pi Zero 2 W. Vito’s GitHub has more details on setting up OnnxStream on a Raspberry Pi with “every KB of RAM needed to run Stable Diffusion.” I ran it on my Raspberry Pi 4B, which has 8 GB of RAM, and wrote this step-by-step guide to help anyone interested in building their own localised diffusion model.

In just 1.5 hours, OnnxStream running on a Raspberry Pi Zero 2 produced output nearly identical to that of a PC.

Lower memory usage than OnnxRuntime

This table shows the inference times of the three models that make up Stable Diffusion 1.5 (text encoder, UNET and VAE decoder), together with their memory consumption (the Peak Working Set Size on Windows or the Maximum Resident Set Size on Linux). Each cell gives peak memory, then inference time.

| Model / Library | 1st run | 2nd run | 3rd run |
|---|---|---|---|
| FP16 UNET / OnnxStream | 0.133 GB - 18.2 secs | 0.133 GB - 18.7 secs | 0.133 GB - 19.8 secs |
| FP16 UNET / OnnxRuntime | 5.085 GB - 12.8 secs | 7.353 GB - 7.28 secs | 7.353 GB - 7.96 secs |
| FP32 Text Enc / OnnxStream | 0.147 GB - 1.26 secs | 0.147 GB - 1.19 secs | 0.147 GB - 1.19 secs |
| FP32 Text Enc / OnnxRuntime | 0.641 GB - 1.02 secs | 0.641 GB - 0.06 secs | 0.641 GB - 0.07 secs |
| FP32 VAE Dec / OnnxStream | 1.004 GB - 20.9 secs | 1.004 GB - 20.6 secs | 1.004 GB - 21.2 secs |
| FP32 VAE Dec / OnnxRuntime | 1.330 GB - 11.2 secs | 2.026 GB - 10.1 secs | 2.026 GB - 11.1 secs |

In the case of the UNET model (when run in FP16 precision, with FP16 arithmetic enabled in OnnxStream), OnnxStream can consume as much as 55x less memory than OnnxRuntime, at the cost of a 50% to 200% increase in latency.
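The headline ratio can be recomputed from the UNET rows of the table. The short Python sketch below simply copies those six cells and derives the memory ratio and the per-run latency increase; it adds no new measurements.

```python
# Peak memory (GB) and inference time (s) for the FP16 UNET, copied from the table
onnxstream = [(0.133, 18.2), (0.133, 18.7), (0.133, 19.8)]
onnxruntime = [(5.085, 12.8), (7.353, 7.28), (7.353, 7.96)]


def memory_ratio(a, b):
    """How many times more peak memory runs `b` needed than runs `a` (max over runs)."""
    return max(mem for mem, _ in b) / max(mem for mem, _ in a)


def latency_increase(a, b):
    """Per-run latency increase of `a` relative to `b`, as a percentage."""
    return [100 * (ta - tb) / tb for (_, ta), (_, tb) in zip(a, b)]


if __name__ == "__main__":
    print(f"Memory ratio: {memory_ratio(onnxstream, onnxruntime):.1f}x")
    for run, pct in enumerate(latency_increase(onnxstream, onnxruntime), start=1):
        print(f"Run {run}: +{pct:.0f}% latency")
```

The first OnnxRuntime run is noticeably slower than its later runs, so the computed latency increase depends on which run you compare against.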

1. Prerequisites

Summary of the bash commands for setting up the environment for building the OnnxStream diffusion model on a Raspberry Pi:

```bash
apt install git
apt install cmake
apt install tmux
wget "https://github.com/conda-forge/miniforge/releases/latest/download/Miniforge3-$(uname)-$(uname -m).sh"
bash Miniforge3-Linux-aarch64.sh
export PATH="${PATH}:/root/miniforge3/bin"
conda install -c conda-forge jupyterlab
conda install ipykernel
jupyter lab --allow-root --ip="your-ip" --port=8082 --log-level=40 --no-browser
```
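Before building, a short check can confirm that the tools installed by these commands are actually on PATH. A minimal sketch using only the Python standard library; the tool list is just the commands from the summary, and missing_tools is a hypothetical helper name.

```python
import shutil

# Commands installed by the steps above; "conda" and "jupyter" are only found
# once /root/miniforge3/bin has been added to PATH
REQUIRED_TOOLS = ["git", "cmake", "tmux", "conda", "jupyter"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All prerequisites found")
```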

1.1 Install Git and CMake

```bash
apt install git
apt install cmake
```

1.2 Install tmux

```bash
apt install tmux
```

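1.3 Install Miniforge (conda)

To install Miniforge, a minimal conda installer from conda-forge, on DietPi OS:

```bash
wget "https://github.com/conda-forge/miniforge/releases/latest/download/Miniforge3-$(uname)-$(uname -m).sh"
bash Miniforge3-Linux-aarch64.sh
```

Add conda to the system PATH (this assumes the default install location for the root user):

```bash
export PATH="${PATH}:/root/miniforge3/bin"
```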

1.4 Install JupyterLab using conda

```bash
conda install -c conda-forge jupyterlab
```

1.5 Install ipykernel

```bash
conda install ipykernel
```

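1.6 Launch JupyterLab on the Raspberry Pi

The first command starts JupyterLab with token and password authentication disabled, so only use it on a trusted network. The second binds JupyterLab to your Pi's IP address on port 8082, allows running as root and does not try to open a browser, so you can connect from another machine:

```bash
jupyter lab --NotebookApp.token='' --NotebookApp.password=''
jupyter lab --allow-root --ip="your-ip" --port=8082 --log-level=40 --no-browser
```

1.7 Copy the URL printed in the terminal into your browser to access JupyterLab on the Raspberry Pi.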
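The --ip value in the launch command should be the Pi's address on your local network (hostname -I on the Pi also lists it). A best-effort Python helper is sketched below; local_ip is a hypothetical name, and the UDP connect trick only asks the OS which interface it would route through, without sending any packets.

```python
import socket


def local_ip(fallback: str = "127.0.0.1") -> str:
    """Best-effort guess of this machine's LAN IPv4 address for `jupyter lab --ip`."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends nothing; it just selects a route,
        # so getsockname() then reports the local address of that route
        s.connect(("8.8.8.8", 80))
        return s.getsockname()[0]
    except OSError:
        # No route available (e.g. offline): fall back to loopback
        return fallback
    finally:
        s.close()


if __name__ == "__main__":
    ip = local_ip()
    print(f'jupyter lab --allow-root --ip="{ip}" --port=8082 --log-level=40 --no-browser')
```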

2. Build the diffusion model

2.1 Clone the XNNPACK project

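```bash
git clone https://github.com/google/XNNPACK.git
cd XNNPACK
# Print the last commit on master before 27 June 2023, then check it out:
# this is the XNNPACK version OnnxStream's build instructions pin
git rev-list -n 1 --before="2023-06-27 00:00" master
git checkout <COMMIT_ID_FROM_THE_PREVIOUS_COMMAND>
mkdir build
cd build
# Tests and benchmarks are not needed to link OnnxStream
cmake -DXNNPACK_BUILD_TESTS=OFF -DXNNPACK_BUILD_BENCHMARKS=OFF ..
cmake --build . --config Release
```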
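If you rebuild often, the pin-and-checkout step can be scripted so the commit ID never has to be copied by hand. A minimal sketch, assuming Python 3.7+ (for capture_output) and git on PATH; pin_xnnpack is a hypothetical helper, not part of XNNPACK or OnnxStream.

```python
import subprocess


def pin_xnnpack(repo_dir, before="2023-06-27 00:00", branch="master", run=subprocess.run):
    """Check out the last commit on `branch` made before `before` (step 2.1).

    `run` defaults to subprocess.run and can be swapped for a stub in tests.
    Returns the commit ID that was checked out.
    """
    # Equivalent to: git rev-list -n 1 --before="2023-06-27 00:00" master
    result = run(
        ["git", "rev-list", "-n", "1", f"--before={before}", branch],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    )
    commit = result.stdout.strip()
    if not commit:
        raise RuntimeError(f"no commit on {branch} before {before}")
    # Equivalent to: git checkout <COMMIT_ID_FROM_THE_PREVIOUS_COMMAND>
    run(["git", "checkout", commit], cwd=repo_dir, check=True)
    return commit
```

Calling pin_xnnpack("XNNPACK") from the directory you cloned into replaces the two git commands; the mkdir and cmake steps stay the same.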

2.2 Clone the OnnxStream project

Replace <DIRECTORY_WHERE_XNNPACK_WAS_CLONED> in the cmake command below with the path to your XNNPACK clone.

```bash
git clone https://github.com/WideSu/OnnxStream.git
cd OnnxStream
cd src
mkdir build
cd build
cmake -DMAX_SPEED=ON -DXNNPACK_DIR=<DIRECTORY_WHERE_XNNPACK_WAS_CLONED> ..
cmake --build . --config Release
```
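The configure step can also be wrapped so that a mistyped XNNPACK path is caught before cmake runs. A sketch only: configure_onnxstream is a hypothetical helper, and the flags are exactly those of the cmake command above. The path is made absolute to avoid any ambiguity about what a relative path is relative to.

```python
import os
import subprocess


def configure_onnxstream(build_dir, xnnpack_dir, dry_run=False):
    """Run (or, with dry_run=True, only return) the cmake configure command of step 2.2."""
    xnnpack_dir = os.path.abspath(os.path.expanduser(xnnpack_dir))
    if not os.path.isdir(xnnpack_dir):
        raise FileNotFoundError(f"XNNPACK clone not found: {xnnpack_dir}")
    # Same flags as: cmake -DMAX_SPEED=ON -DXNNPACK_DIR=<...> ..
    cmd = ["cmake", "-DMAX_SPEED=ON", f"-DXNNPACK_DIR={xnnpack_dir}", ".."]
    if not dry_run:
        os.makedirs(build_dir, exist_ok=True)
        subprocess.run(cmd, cwd=build_dir, check=True)
    return cmd
```

After it succeeds, cmake --build . --config Release is still run from the build directory.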

Exporting Your Model

Option A: Exporting from Hugging Face (Recommended)

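```python
import torch
from diffusers import StableDiffusionPipeline

# Load a custom SD 1.5 checkpoint stored as a single .safetensors file
pipe = StableDiffusionPipeline.from_single_file("https://huggingface.co/YourUsername/YourModel/blob/main/Model.safetensors")

# Fixed-shape dummy UNET inputs: latent (batch 1, 4 channels, 64x64),
# timestep, and CLIP text embeddings (77 tokens x 768 features)
dummy_input = (torch.randn(1, 4, 64, 64), torch.randn(1), torch.randn(1, 77, 768))
input_names = ["sample", "timestep", "encoder_hidden_states"]
output_names = ["out_sample"]

# No dynamic_axes are passed, so the exported graph keeps the fixed shapes of dummy_input
torch.onnx.export(pipe.unet, dummy_input, "/path/to/save/unet_temp.onnx", verbose=False,
                  input_names=input_names, output_names=output_names, opset_version=14,
                  do_constant_folding=True, export_params=True)
```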
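The sizes in dummy_input follow from the 512x512 image size: SD 1.5's VAE encodes images into 4-channel latents at 1/8 of the resolution (64x64), and its CLIP text encoder produces 77 tokens of 768 features each. A small hypothetical helper making that relationship explicit; this guide only uses the 512x512 case.

```python
def unet_input_shapes(height=512, width=512, batch=1):
    """Fixed UNET input shapes for Stable Diffusion 1.5 at a given image size.

    Latents have 4 channels at 1/8 of the image resolution; text conditioning
    is 77 CLIP tokens x 768 features.
    """
    if height % 8 or width % 8:
        raise ValueError("height and width must be multiples of 8")
    return {
        "sample": (batch, 4, height // 8, width // 8),
        "timestep": (batch,),
        "encoder_hidden_states": (batch, 77, 768),
    }


if __name__ == "__main__":
    for name, shape in unet_input_shapes().items():
        print(name, ",".join(map(str, shape)))
```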

Option B: Manually Fixing Input Shapes

```bash
python -m onnxruntime.tools.make_dynamic_shape_fixed --input_name sample --input_shape 1,4,64,64 model.onnx model_fixed1.onnx
python -m onnxruntime.tools.make_dynamic_shape_fixed --input_name timestep --input_shape 1 model_fixed1.onnx model_fixed2.onnx
python -m onnxruntime.tools.make_dynamic_shape_fixed --input_name encoder_hidden_states --input_shape 1,77,768 model_fixed2.onnx model_fixed3.onnx
```

Note by Vito: This can be achieved simply by following the approach outlined in "Option A" above, which remains the recommended approach. Making the input shapes fixed might be useful if your starting point is already an ONNX file.
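In Option B, each make_dynamic_shape_fixed call reads the previous call's output, so the three commands form a chain. A sketch that generates that chain; fix_shape_commands is a hypothetical helper, and the input names, shapes and file names are those of Option B.

```python
import sys

# Input names and fixed shapes used in Option B
FIXED_SHAPES = [
    ("sample", "1,4,64,64"),
    ("timestep", "1"),
    ("encoder_hidden_states", "1,77,768"),
]


def fix_shape_commands(src="model.onnx", prefix="model_fixed"):
    """Build the chained make_dynamic_shape_fixed commands; each reads the previous output."""
    commands, current = [], src
    for i, (name, shape) in enumerate(FIXED_SHAPES, start=1):
        out = f"{prefix}{i}.onnx"
        commands.append([sys.executable, "-m", "onnxruntime.tools.make_dynamic_shape_fixed",
                         "--input_name", name, "--input_shape", shape, current, out])
        current = out
    return commands
```

To execute them, pass each command to subprocess.run(cmd, check=True) in order; the last one writes model_fixed3.onnx.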

Running ONNX Simplifier

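Run ONNX Simplifier (installed as the onnx-simplifier package, which provides the onnxsim module) on the fixed-shape model, i.e. model_fixed3.onnx from Option B or the file exported in Option A:

```bash
python -m onnxsim model_fixed3.onnx model_simplified.onnx
```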

Note:

If you exported your model from Hugging Face, you'll need around 100GB of swap space.

If you manually fixed the input shapes, 16GB of RAM should suffice.

The process may take some time; please be patient.
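Because the simplifier needs a lot of memory, it is worth checking how much RAM and swap the machine has before starting. A Linux-only sketch that reads /proc/meminfo; parse_meminfo and memory_summary_gb are hypothetical helpers, and the 100 GB and 16 GB figures to compare against come from the note above. Note that MemTotal excludes memory reserved by the kernel, so a "16 GB" machine reports slightly less.

```python
import os


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of values (in kB)."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            info[key.strip()] = int(fields[0])
    return info


def memory_summary_gb(info):
    """Installed RAM and configured swap in GiB (meminfo reports KiB)."""
    return {
        "ram_gb": info.get("MemTotal", 0) / 1024**2,
        "swap_gb": info.get("SwapTotal", 0) / 1024**2,
    }


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        summary = memory_summary_gb(parse_meminfo(f.read()))
    # Rough guidance from the note: ~100 GB swap (Option A), ~16 GB RAM (Option B)
    print(f"RAM: {summary['ram_gb']:.1f} GB, swap: {summary['swap_gb']:.1f} GB")
```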

Final Steps and Running the Model

Once you have converted the simplified model with onnx2txt (onnx2txt.ipynb), move the resulting files into the unet_fp16 folder of the standard SD 1.5 model, which can be found in the Windows release of OnnxStream.

The command to run the model might look like this:

```bash
./sd --models-path ./Converted/ --prompt "space landscape" --steps 28 --rpi
```
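Moving the onnx2txt output into place can also be scripted. The sketch below is a hypothetical helper (install_unet): it copies, rather than moves, every file in the onnx2txt output folder into <models-path>/unet_fp16, overwriting files with the same name. It makes no assumption about which file names onnx2txt produces; backing up the original unet_fp16 folder first is a sensible precaution.

```python
import shutil
from pathlib import Path


def install_unet(onnx2txt_output_dir, models_path):
    """Copy every file produced by onnx2txt into <models_path>/unet_fp16.

    Files with the same name (from the stock SD 1.5 UNET) are overwritten.
    Returns the sorted list of copied file names.
    """
    src = Path(onnx2txt_output_dir)
    dst = Path(models_path) / "unet_fp16"
    if not dst.is_dir():
        raise FileNotFoundError(f"{dst} not found; is models_path the SD 1.5 model folder?")
    copied = []
    for f in sorted(src.iterdir()):
        if f.is_file():
            shutil.copy2(f, dst / f.name)
            copied.append(f.name)
    return copied
```

For example, install_unet("path/to/onnx2txt/output", "./Converted/") (placeholder path) would prepare the folder passed to --models-path in the sd command.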

Note on the "Shape" Operator

If the "Shape" operator appears in the output of ONNX Simplifier or in onnx2txt.ipynb, ONNX Simplifier has probably not worked as expected. This is often caused not by ONNX Simplifier itself but by ONNX's shape inference.

Alternative Solution

In such cases, you can instead re-export the model after modifying the parameters passed to torch.onnx.export. Locate this file on your computer:

And make sure to:

- Set opset_version to 14.
- Remove dynamic_axes.

After making these changes, you can rerun ONNX Simplifier and onnx2txt.

Note by Vito: This solution, although working, generates ONNX files with Einsum operations. When OnnxStream supports the Einsum operator, this solution will become the recommended one.
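To check a model for the operators mentioned in these notes ("Shape" after simplification, "Einsum" after the re-export workaround) before running onnx2txt, the op types of the graph's nodes can be listed with the onnx Python package. A sketch, assuming onnx is installed; flagged_ops and op_types_from_onnx are hypothetical helpers, and only the top-level graph is scanned.

```python
from collections import Counter

# "Shape" suggests shape inference failed; "Einsum" comes from the re-export workaround
FLAGGED_OPS = {"Shape", "Einsum"}


def flagged_ops(op_types):
    """Count occurrences of flagged operators in an iterable of op type names."""
    return dict(Counter(op for op in op_types if op in FLAGGED_OPS))


def op_types_from_onnx(path):
    """Return the op type of every top-level node in an ONNX file (needs `onnx`)."""
    import onnx  # imported lazily so flagged_ops() works without onnx installed

    # Skip external weight files: only the graph structure is needed here
    model = onnx.load(path, load_external_data=False)
    return [node.op_type for node in model.graph.node]
```

flagged_ops(op_types_from_onnx("model_simplified.onnx")) returns an empty dict when neither operator is present.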

Conclusion

This guide is designed to be a comprehensive resource for those looking to run a custom Stable Diffusion 1.5 model with OnnxStream. Additional contributions are welcome!

Related Projects

OnnxStreamGui by @ThomAce: a web and desktop user interface for OnnxStream.

Auto epaper art by @rvdveen: a self-contained image generation picture frame showing news.